Action Recognition in Temporally Untrimmed Videos

Year: 2015

Authors

Bryan Anenberg and Norman Yu

Abstract

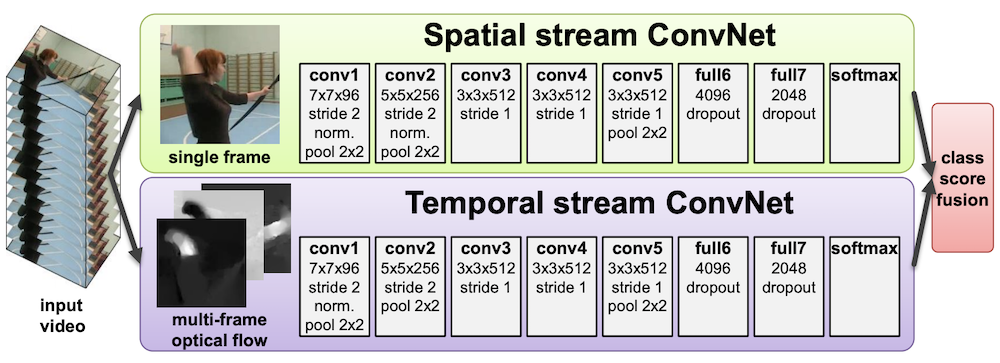

We investigate strategies for applying Convolutional Neural Networks (CNNs) to recognize activities in temporally untrimmed videos. We consider techniques that segment a video into scenes so that frames relevant to the activity can be separated from background and otherwise irrelevant frames. We evaluate CNNs trained on frames sampled from the videos and present an approach towards implementing the two-stream CNN architecture outlined in Simonyan et al. The system is trained and evaluated on the THUMOS Challenge 2014 data set, which consists of the UCF-101 data set and additional temporally untrimmed videos.
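The abstract does not say how scenes are detected, so the following is only an illustrative sketch of one common approach, not the authors' method. It assumes each frame has already been reduced to a small feature vector (for example, a normalized color histogram) and starts a new scene wherever consecutive vectors differ by more than a threshold. The names `segment_scenes`, `sample_frames`, and `threshold` are hypothetical and chosen for this example.

```python
from typing import List, Sequence, Tuple


def l1_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Sum of absolute differences between two equal-length feature vectors."""
    return sum(abs(x - y) for x, y in zip(a, b))


def segment_scenes(
    features: Sequence[Sequence[float]], threshold: float
) -> List[Tuple[int, int]]:
    """Split frame indices into scenes, returned as half-open (start, end) ranges.

    A new scene starts at frame i when the distance between the features of
    frames i-1 and i exceeds `threshold` (an assumed tuning parameter).
    """
    if not features:
        return []
    scenes = []
    start = 0
    for i in range(1, len(features)):
        if l1_distance(features[i - 1], features[i]) > threshold:
            scenes.append((start, i))
            start = i
    scenes.append((start, len(features)))
    return scenes


def sample_frames(scene: Tuple[int, int], step: int) -> List[int]:
    """Pick every `step`-th frame index inside one scene."""
    start, end = scene
    return list(range(start, end, step))


# Toy per-frame features: two frames of one scene, then three of another.
features = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9], [0.1, 0.9]]
scenes = segment_scenes(features, threshold=0.5)
sampled = [sample_frames(scene, step=2) for scene in scenes]
```

In a pipeline like the one described, the sampled frame indices from each scene would then be the inputs passed to a frame-level CNN. How scenes are scored as relevant or irrelevant to the activity is left open here.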

Resources

Technologies:

- Caffe

- Python