Tree LSTMs for Quiz Bowl Question Answering

Year: 2015

Authors

Bryan Anenberg, Albert Chen

Abstract

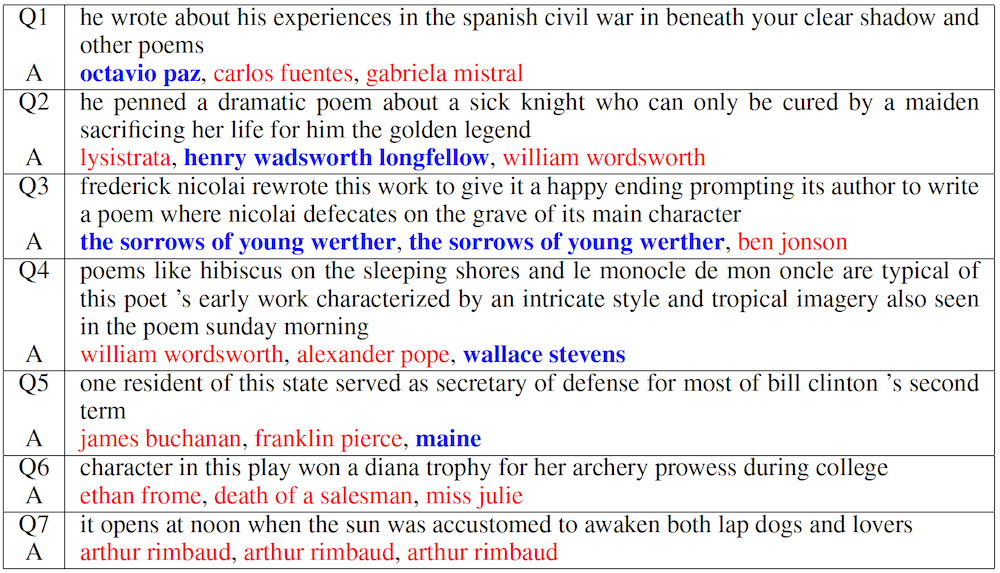

Automatic question answering is a difficult NLP task because a model must understand not only individual words but also the phrases they form. Bag-of-words models therefore perform poorly, while recursive neural networks built on sentence parse trees have shown more promise. Here we extend the work of Iyyer et al., who used a dependency-tree RNN model (called QANTA) to answer quiz bowl questions. We apply the recently developed Tree LSTM model to the task and show that it improves accuracy by 3% on history and literature questions. Furthermore, we introduce a Tree LSTM Tensor model that flexibly incorporates dependency relation information; although it outperforms QANTA on the literature set, the plain Tree LSTM model has the best performance overall.
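To make the idea of composing a representation over a parse tree concrete, here is a minimal NumPy sketch of a single Tree LSTM node update and a recursive tree encoder. It assumes the Child-Sum Tree LSTM formulation, which is a common choice for dependency trees; the abstract does not say which variant the authors used. The function names (`init_params`, `node_forward`, `encode_tree`), the `(word_vector, children)` tuple encoding of a tree, and the dimensions are all hypothetical, and this is not the authors' implementation.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def init_params(input_dim, hidden_dim, seed=0):
    """Small random weights for the input (i), forget (f), output (o) gates and update (u)."""
    rng = np.random.default_rng(seed)
    params = {}
    for gate in ("i", "f", "o", "u"):
        params["W_" + gate] = rng.normal(0.0, 0.1, (hidden_dim, input_dim))
        params["U_" + gate] = rng.normal(0.0, 0.1, (hidden_dim, hidden_dim))
        params["b_" + gate] = np.zeros(hidden_dim)
    return params


def node_forward(x, child_h, child_c, p):
    """Child-Sum Tree LSTM update for one node (assumed variant).

    x        : word vector of this node, shape (input_dim,)
    child_h  : list of child hidden states, each shape (hidden_dim,)
    child_c  : list of child memory cells, each shape (hidden_dim,)
    """
    hidden_dim = p["b_i"].shape[0]
    # Children are summarized by the sum of their hidden states.
    h_sum = np.sum(child_h, axis=0) if child_h else np.zeros(hidden_dim)

    i = sigmoid(p["W_i"] @ x + p["U_i"] @ h_sum + p["b_i"])
    o = sigmoid(p["W_o"] @ x + p["U_o"] @ h_sum + p["b_o"])
    u = np.tanh(p["W_u"] @ x + p["U_u"] @ h_sum + p["b_u"])

    c = i * u
    # One forget gate per child, computed from that child's own hidden state.
    for h_k, c_k in zip(child_h, child_c):
        f_k = sigmoid(p["W_f"] @ x + p["U_f"] @ h_k + p["b_f"])
        c = c + f_k * c_k

    h = o * np.tanh(c)
    return h, c


def encode_tree(node, p):
    """Recursively encode a tree given as (word_vector, [child_nodes])."""
    x, children = node
    states = [encode_tree(child, p) for child in children]
    child_h = [h for h, _ in states]
    child_c = [c for _, c in states]
    return node_forward(x, child_h, child_c, p)
```

As a usage example, a head word with two dependents could be encoded with `encode_tree((x_root, [(x_a, []), (x_b, [])]), init_params(4, 3))`, where each `x_*` is a length-4 word vector; the returned root hidden state would then feed an answer classifier.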

Resources

Figures

Technologies

- Python

- NumPy